Technology Excellence

Microsoft Responsible AI Toolbox: Ensuring Ethical AI Development and Implementation

By Disha Mundhe

Published on June 22, 2025

Introduction

Artificial Intelligence (AI) is no longer confined to futuristic visions—it’s powering decisions in healthcare, finance, retail, education, and countless other industries. From chatbots handling customer inquiries to algorithms influencing loan approvals, AI systems are rapidly reshaping the way businesses operate.

But with this growing influence comes a critical responsibility: ensuring AI is developed and used ethically.

As organizations race to adopt AI, many face a daunting challenge: how to build systems that are not only innovative but also fair, transparent, and accountable. Biased algorithms, opaque decision-making, and regulatory uncertainty can lead to serious consequences, from public backlash to legal penalties.

This is where Microsoft’s Responsible AI ecosystem comes into play. Through a comprehensive suite of tools, frameworks, and governance practices, Microsoft empowers developers and enterprises to embed responsibility at every stage of the AI lifecycle. These solutions help businesses meet evolving global regulations, reduce risk, and—most importantly—build AI systems that people can trust.

In this blog, we’ll explore how Microsoft’s Responsible AI tools support ethical development, prevent harm, and create long-term value for organizations and society alike.

The Imperative for Responsible AI

The value of Responsible AI cannot be overstated. Beyond ticking compliance checkboxes, a thoughtful approach to AI ethics yields significant strategic benefits:

• Building Trust: Transparent and equitable AI fosters trust among consumers, employees, and partners.

• Risk Mitigation: Ethical AI deployment acts as a safeguard against discriminatory outcomes, legal liabilities, and reputational harm.

• Global Compliance: Regulations such as the GDPR, and voluntary frameworks such as Australia’s AI Ethics Principles, call for demonstrable accountability and fairness.

• Social Good: Most importantly, responsible AI should uplift society, reduce inequalities, and prevent the amplification of existing biases.

Let’s now delve into the core tools and strategies Microsoft offers to make this vision a reality.

1. Identifying and Mitigating Bias with Microsoft’s Interpretability, Fairness, and Error Analysis Tools

AI models are only as good as the data they’re trained on, and that data often reflects real-world biases. Left unchecked, these biases can lead to AI systems that produce unjust or even discriminatory outcomes. Microsoft addresses this challenge with open-source tools for interpretability, fairness assessment, and error analysis that help surface hidden biases and reduce them.

Key Tools for Bias Detection and Error Analysis

- InterpretML: An open-source library that provides model interpretability through:

• Feature importance analysis

• Partial dependence plots

• SHAP (SHapley Additive exPlanations) values for understanding individual predictions

- Fairlearn: This toolkit zeroes in on fairness, enabling developers to:

• Evaluate fairness across sensitive attributes like race, gender, or age

• Apply mitigation techniques to reduce disparities

• Visualize disparities and explore trade-offs such as fairness versus accuracy

- Azure Machine Learning Integration: Microsoft has embedded these tools into the Responsible AI dashboard in Azure ML, making it easier for developers to:

• Identify cohorts with high error rates

• Analyze model behavior across multiple dimensions

• Compare outcomes between demographic groups
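To make "compare outcomes between demographic groups" concrete, here is a minimal, dependency-free sketch of the kind of by-group breakdown Fairlearn's `MetricFrame` produces. The labels, predictions, and group names are hypothetical toy data, and the helpers `group_metrics` and `selection_rate_gap` are illustrative names, not part of any Microsoft library. The gap they report corresponds to what `fairlearn.metrics.demographic_parity_difference` measures by default: the largest minus the smallest selection rate across groups.

```python
from collections import defaultdict

def group_metrics(y_true, y_pred, groups):
    """Selection rate and error rate per group for binary (0/1) predictions."""
    counts = defaultdict(lambda: {"n": 0, "selected": 0, "errors": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        c = counts[group]
        c["n"] += 1
        c["selected"] += pred              # a prediction of 1 counts as "selected"
        c["errors"] += int(truth != pred)  # misclassification
    return {
        group: {
            "selection_rate": c["selected"] / c["n"],
            "error_rate": c["errors"] / c["n"],
        }
        for group, c in counts.items()
    }

def selection_rate_gap(metrics):
    """Largest minus smallest selection rate across groups (0 means parity)."""
    rates = [m["selection_rate"] for m in metrics.values()]
    return max(rates) - min(rates)

# Hypothetical loan-approval data: 1 = approved, applicants in groups A and B.
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 1, 1, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

metrics = group_metrics(y_true, y_pred, groups)
print(metrics["B"])                 # {'selection_rate': 0.25, 'error_rate': 0.5}
print(selection_rate_gap(metrics))  # 0.5
```

In this toy data, group B is approved far less often and is also misclassified more often. That is the pattern the cohort views in Azure ML are meant to surface, on real data and at scale.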

These tools go beyond gut instinct or subjective judgment—they enable data-driven evaluations of fairness and help developers course-correct models before deployment.
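One family of mitigation techniques course-corrects a model after training by adjusting its decision thresholds. The sketch below is a deliberately simplified illustration of that idea. It follows the spirit of Fairlearn's `ThresholdOptimizer` but not its actual algorithm, which searches for group-specific (possibly randomized) thresholds under a fairness constraint. The scores and the `per_group_thresholds` helper are hypothetical.

```python
def selection_rate(scores, threshold):
    """Fraction of scores at or above the decision threshold."""
    return sum(score >= threshold for score in scores) / len(scores)

def per_group_thresholds(scores_by_group, target_rate):
    """Choose a cut-off per group so each group is selected at roughly target_rate.

    Simplified post-processing mitigation: ties at the cut-off can select
    slightly more than the target.
    """
    thresholds = {}
    for group, scores in scores_by_group.items():
        ranked = sorted(scores, reverse=True)
        k = max(1, round(target_rate * len(ranked)))  # how many to select
        thresholds[group] = ranked[k - 1]
    return thresholds

# Hypothetical model scores for two groups.
scores_by_group = {
    "A": [0.90, 0.80, 0.75, 0.60, 0.30],
    "B": [0.70, 0.55, 0.40, 0.35, 0.20],
}

# One shared threshold selects the two groups at very different rates.
print({g: selection_rate(s, 0.6) for g, s in scores_by_group.items()})  # {'A': 0.8, 'B': 0.2}

# Group-specific thresholds equalize the selection rate at 40%.
thresholds = per_group_thresholds(scores_by_group, target_rate=0.4)
print(thresholds)  # {'A': 0.8, 'B': 0.55}
print({g: selection_rate(s, thresholds[g]) for g, s in scores_by_group.items()})  # {'A': 0.4, 'B': 0.4}
```

Because the thresholds depend on group membership, this approach needs the sensitive attribute at decision time, as `ThresholdOptimizer` also does. Some regulated domains restrict that. Reduction methods such as Fairlearn's `ExponentiatedGradient` instead apply the fairness constraint during training.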

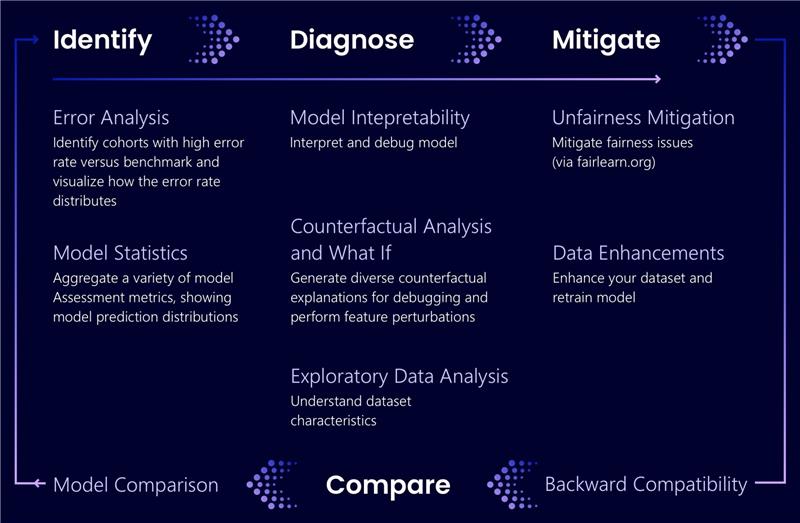

2. The Responsible AI Dashboard: A Unified View into Ethical AI

Image Source: Microsoft Responsible AI Toolbox

The Responsible AI Dashboard brings together multiple ethical AI components into a centralized, visual interface. It acts as a control center for monitoring AI systems throughout their entire lifecycle—from model training to post-deployment review.

- What the Dashboard Includes

• Model Statistics: Summary metrics for accuracy, fairness, and reliability

• Data Explorer: Assess the quality and diversity of training data

• Interpretability: Explain model behavior through aggregate and individual feature importance

• Error Analysis: Identify cohorts with high error rates and understand the root causes of those errors

• Counterfactual Analysis: Test “what-if” scenarios to determine outcome sensitivity

• Causal Inference: Identify the features that have the most direct effect on your outcome of interest
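A counterfactual answers the question: "what is the smallest change that would have produced a different decision?" The dashboard's what-if component is built on the DiCE library and searches across many features at once, subject to feasibility constraints. The single-feature search below is only an illustration of the idea. It uses a hypothetical `approve` rule in place of a trained model, and the feature names are made up.

```python
def approve(applicant):
    """Hypothetical scoring rule standing in for a trained loan model."""
    score = 2 * applicant["income_k"] - 25 * applicant["open_loans"]
    return score >= 100

def counterfactual(applicant, feature, step, max_steps=50):
    """Smallest change to one feature (in multiples of step) that flips the decision.

    Returns the modified applicant, or None if no flip occurs within max_steps.
    """
    original = approve(applicant)
    for i in range(1, max_steps + 1):
        candidate = dict(applicant, **{feature: applicant[feature] + i * step})
        if approve(candidate) != original:
            return candidate
    return None

applicant = {"income_k": 50, "open_loans": 1}
print(approve(applicant))                                # False
print(counterfactual(applicant, "income_k", step=5))     # {'income_k': 65, 'open_loans': 1}
print(counterfactual(applicant, "open_loans", step=-1))  # {'income_k': 50, 'open_loans': 0}
```

A real search would also respect permitted ranges (for example, `open_loans` cannot go below zero) and prefer changes the applicant can actually act on. If small, plausible changes flip the outcome, the decision is highly sensitive to that feature.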

- Best Practices for Dashboard Implementation

• Involve Diverse Stakeholders: Include voices from legal, HR, marketing, and operations in the dashboard’s design and review

• Customize Metrics: Align analysis with your industry and use-case (e.g., healthcare, finance, retail)

• Establish Action Plans: Ensure identified issues trigger defined remediation workflows

By surfacing these insights early and often, teams can intervene before a model causes harm in the real world. This makes AI development proactive, not reactive—ultimately saving time, money, and reputational capital.

3. Aligning AI with Organizational Values and Legal Requirements

While tools are vital, technology alone doesn’t guarantee responsibility. Ethics must be woven into an organization’s AI philosophy, governance, and compliance framework. Microsoft provides both philosophical guidance and practical resources to help businesses do just that.

- Microsoft’s Responsible AI Principles

• Fairness – Treat all people fairly and avoid discriminatory outcomes

• Reliability and Safety – Ensure consistent, dependable performance

• Privacy and Security – Respect user privacy and safeguard data

• Inclusiveness – Empower diverse users and perspectives

• Transparency – Make systems and decisions understandable

• Accountability – Hold humans responsible for AI outcomes

- Compliance & Governance Tools

• Compliance documentation showing how Azure AI services meet legal standards

• Audit trails to document development decisions and data lineage

• Regulatory templates and checklists to support local and global legal obligations

• Guides and whitepapers tailored to regulations like GDPR, HIPAA, and the EU AI Act

One critical mechanism is the AI Impact Assessment, which organizations use to:

• Define the use case and potential impact

• Identify stakeholders and affected groups

• Assess risks and plan mitigations

• Document assumptions, limitations, and review timelines

This structured methodology helps ensure AI initiatives stay grounded in real-world ethics and operational accountability.
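Teams that want the assessment to feed audit trails sometimes keep it as structured data rather than as a free-form document. The record below is a hypothetical sketch, not Microsoft's official template. The field names and the 180-day review cadence are assumptions chosen to mirror the four steps listed above. Its checks flag undocumented sections, risks without a mitigation plan, and overdue reviews.

```python
from dataclasses import dataclass
from datetime import date, timedelta

@dataclass
class ImpactAssessment:
    """Hypothetical structured record of an AI impact assessment."""
    use_case: str
    potential_impact: str
    stakeholders: list
    risks_and_mitigations: dict  # risk -> planned mitigation ("" if none yet)
    assumptions: list
    limitations: list
    last_reviewed: date
    review_interval_days: int = 180  # assumed cadence; set per internal policy

    def missing_sections(self):
        """Names of fields left empty, i.e. steps not yet documented."""
        return [name for name, value in vars(self).items() if value in ("", [], {})]

    def unmitigated_risks(self):
        """Risks recorded without any mitigation plan."""
        return [risk for risk, plan in self.risks_and_mitigations.items() if not plan]

    def next_review(self):
        return self.last_reviewed + timedelta(days=self.review_interval_days)

    def is_overdue(self, today):
        return today > self.next_review()

assessment = ImpactAssessment(
    use_case="Loan pre-screening",
    potential_impact="Influences access to credit",
    stakeholders=["Applicants", "Credit officers", "Compliance team"],
    risks_and_mitigations={
        "Lower approval rates for some groups": "Per-group fairness review before release",
        "Applicants cannot contest decisions": "",
    },
    assumptions=[],
    limitations=["Not validated for small-business loans"],
    last_reviewed=date(2025, 1, 15),
)
print(assessment.missing_sections())             # ['assumptions']
print(assessment.unmitigated_risks())            # ['Applicants cannot contest decisions']
print(assessment.next_review())                  # 2025-07-14
print(assessment.is_overdue(date(2025, 6, 22)))  # False
```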

4. Practical Strategies for Responsible AI Adoption

So, how can businesses bring all of this together in practice? Microsoft recommends a multi-pronged strategy that balances culture, governance, and technical rigor.

- Establish Responsible AI Capabilities

• Train cross-functional teams in ethical AI principles

• Build internal champions to lead initiatives

• Create a governance board for oversight

• Develop escalation processes for ethical concerns

- Integrate Ethics into Development Pipelines

• Embed fairness assessments in CI/CD workflows

• Include ethical questions in code reviews

• Track responsible AI KPIs such as fairness, explainability, and user trust alongside accuracy

• Create centralized documentation repositories for accountability
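Embedding fairness assessments in CI/CD usually means turning the metrics into a pass/fail gate. This sketch assumes an earlier pipeline step has already written per-group metrics to JSON, for example metrics computed with Fairlearn's `MetricFrame`. The `POLICY` thresholds, the report format, and the helper names are hypothetical.

```python
import json

# Hypothetical policy: maximum allowed gap between groups for each metric.
POLICY = {"selection_rate": 0.10, "error_rate": 0.05}

def check_fairness(report, policy=POLICY):
    """Return policy violations for a per-group metrics report (empty list = pass)."""
    violations = []
    for metric, max_gap in policy.items():
        values = [metrics[metric] for metrics in report.values()]
        gap = max(values) - min(values)
        if gap > max_gap:
            violations.append(f"{metric} gap {gap:.2f} exceeds {max_gap:.2f}")
    return violations

def exit_code(report):
    """0 lets the pipeline continue; 1 fails the CI job and blocks the release."""
    return 1 if check_fairness(report) else 0

# Example report, as an earlier evaluation step might write it to a JSON file.
report = json.loads("""
{"A": {"selection_rate": 0.62, "error_rate": 0.08},
 "B": {"selection_rate": 0.58, "error_rate": 0.15}}
""")
print(check_fairness(report))  # ['error_rate gap 0.07 exceeds 0.05']
print(exit_code(report))       # 1
```

In the pipeline script itself you would end with `sys.exit(exit_code(report))`. Any violation then fails the build, and the violation messages appear in the job log for reviewers.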

- Enable Continuous Monitoring & Feedback Loops

• Monitor deployed models using telemetry and behavioral analytics

• Establish feedback channels for users to report anomalies

• Periodically reassess models as data and conditions evolve

• Define response playbooks for ethical escalations
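Periodic reassessment often starts with a drift check that compares live inputs against the training baseline. The sketch below uses one common technique, the Population Stability Index (PSI). It is not a specific Azure ML monitoring API. The data, the bin edges, and the 0.25 alert level (a widely used rule of thumb) are assumptions.

```python
import bisect
import math

def psi(baseline, live, cut_points, floor=1e-4):
    """Population Stability Index between a baseline sample and a live sample.

    cut_points are sorted bin edges, giving len(cut_points) + 1 bins.
    Larger values mean the live distribution has drifted further from baseline.
    """
    def proportions(values):
        counts = [0] * (len(cut_points) + 1)
        for v in values:
            counts[bisect.bisect_right(cut_points, v)] += 1
        # Floor empty bins so the logarithm stays finite.
        return [max(c / len(values), floor) for c in counts]

    expected, actual = proportions(baseline), proportions(live)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))

baseline = [20, 25, 30, 35, 40, 45, 50, 55]  # e.g. applicant income (thousands) at training time
live = [35, 40, 45, 50, 55, 60, 65, 70]      # hypothetical values seen in production
cut_points = [30, 45]

print(round(psi(baseline, baseline, cut_points), 3))  # 0.0
print(psi(baseline, live, cut_points) > 0.25)         # True
```

A score above the chosen level would feed the response playbook, for example by triggering a fairness re-evaluation on recent data before the model continues serving decisions.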

5. How Ambiment Can Support Your Responsible AI Journey

At Ambiment, we understand that adopting Responsible AI can seem overwhelming—especially when it involves legal risks, stakeholder pressure, and fast-changing technologies. That’s where we step in.

We offer end-to-end guidance and implementation support for organizations seeking to embed Responsible AI using Microsoft’s frameworks. From conducting AI impact assessments to setting up dashboards and training teams, Ambiment helps ensure you’re not just compliant but also competitive and trusted.

Our expertise lies in:

• Customizing Microsoft’s Responsible AI tools to your business needs

• Building cross-functional alignment between tech and compliance

• Integrating ethical review into agile development workflows

• Driving cultural transformation that sustains responsible innovation

Partner with us to turn ethical AI into a strategic advantage.

For more information or to explore how we can help, get in touch with us. We believe even one conversation can lead to something amazing—and we’d love to hear from you!